Building a User Research Panel

In a previous post, Scaling UX Research for the Enterprise, I shared how our small-but-growing research team transitioned from operating as individual actors to establishing the tools and processes necessary to facilitate the rapid expansion of our team. Looking at our research lifecycle, we identified user recruitment as one of the most time-consuming and yet most easily automated aspects of our work.

While we each had our own spreadsheets of participant lists that we had cobbled together over time, we had no way of fully leveraging each other’s lists or knowing if our lists contained duplicates — much less controlling for over-recruiting. Nor did we have an effective “official” mechanism for recruiting new users to participate in our research activities. Recruiting B2B users is expensive, difficult, and extremely time-consuming, and this is particularly true for users in the legal domain.

It was time to create order — both for ourselves and for our users. Enter… the user research panel.

Getting started

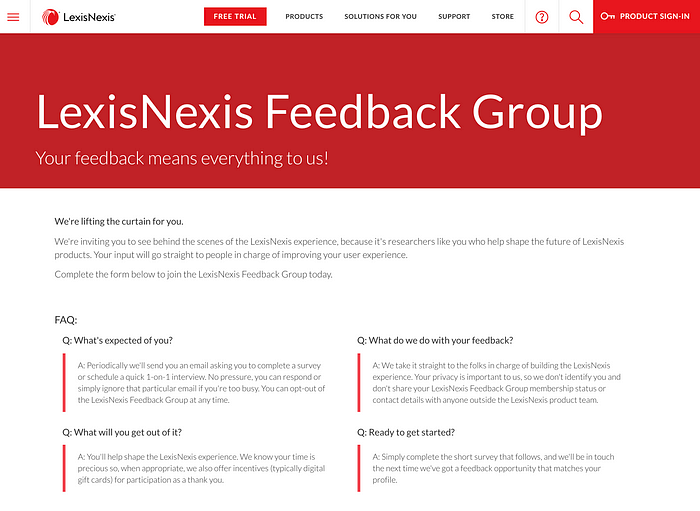

While there are several vendors who will provide recruiting and panel services, we chose to build our own for several reasons. First and foremost, our goal with the panel was to identify users who genuinely wanted to partner with us to build better products. Even though we provide incentives to participate in our research studies, our desire is to recruit individuals for whom the incentive is a token of gratitude, not their primary reason for participating in the research. Also, third-party solutions often have difficulty sourcing the niche audiences that we need to recruit, and we feared that the individuals in those panels wouldn’t have enough of a connection to our products to provide the types of feedback that we required.

Second, since we already had an established customer base, we knew that we could leverage our customer-facing colleagues to recruit panelists — which would be more cost-effective and allow us greater control.

Last, we needed to require panelists to sign our non-disclosure agreement (and trust that they would comply with it).

Conduct an inventory

Since we had been conducting research and had several different participant lists, our first step was to conduct an inventory of all of them. We assigned one team member to gather every list, de-duplicate the entries, and determine what information the lists had in common about our users. This showed us where we had gaps, both in the types of users and personas we needed more of and in the profile data we needed about our users to recruit effectively.
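The merge-and-de-dup step can be sketched in a few lines. This is a minimal illustration, not our actual process: it assumes each list is a set of records keyed by email address, and the field names (`email`, `role`, `country`) are hypothetical. It also counts how many merged records have each field, which is one simple way to spot profile-data gaps.

```python
from collections import Counter


def normalize_email(email: str) -> str:
    """Lowercase and trim an email so trivial variants match."""
    return email.strip().lower()


def merge_participant_lists(lists):
    """Merge several participant lists into one record per email.

    Later lists only fill in fields that earlier lists left empty;
    existing non-empty values are never overwritten.
    """
    merged = {}
    for participant_list in lists:
        for record in participant_list:
            key = normalize_email(record["email"])
            existing = merged.setdefault(key, {"email": key})
            for field, value in record.items():
                if field == "email" or value in (None, ""):
                    continue  # skip the key itself and empty values
                existing.setdefault(field, value)
    return list(merged.values())


def field_coverage(records, fields):
    """Count, per field, how many merged records have a value for it."""
    counts = Counter()
    for record in records:
        for field in fields:
            if record.get(field) not in (None, ""):
                counts[field] += 1
    return {field: counts[field] for field in fields}
```

A low count for a field in `field_coverage` points to profile data the panel survey should collect.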

Define the profile survey

In this step we identified the core set of information that we wanted to know about each of our panel members so that we could have a consistent set of profile information to be able to recruit from. This short profile survey would become the instrument for signing up to be a part of our panel. Our goal was to create a survey that users could complete in about five minutes, so we needed to be judicious about how much information we could ask for in this initial survey.

Because we were building a single global panel to include users from all of our products, we needed one profile survey that would work across all of our users, from attorneys to competitive intelligence professionals to graduate students to marketing professionals. This required some conversation and compromise, but by establishing a few ground rules and some simple survey logic, we were able to create a single profile survey that works for any user, regardless of who they are or where they work.

Rule #1 — Identify essential screen in / out data.

Even though the goal of the panel was to recruit in as many users as possible, there were still certain constraints around the optimal pool of panelists. Defining those hard lines allowed us to identify the initial screening questions that had to be a part of our panel profile survey.

For example, because privacy laws vary from country to country, we decided to screen in users only from countries for which we had clearly defined and approved processes. There is a trade-off (it makes extending our panel to other countries slower and more labor-intensive), but it lets us be confident that we comply with each country’s laws and respect users’ wishes for their data.

We also chose to screen out individuals who are employed by our primary competitors so as to not inadvertently share confidential information with them. Additionally, panelists are required to agree to the terms of our non-disclosure agreement; failure to agree to those terms screens the individual out of the panel.
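The three hard screening rules amount to a short chain of checks. The sketch below assumes the survey captures a country code, an employer name, and an NDA checkbox; the country and competitor sets are placeholders, since the approved countries and competitor names are not given here. In practice this logic lives in the survey tool’s branching rather than in code.

```python
from dataclasses import dataclass

# Placeholder values only: the real approved-country and competitor
# lists are maintained by the team and are not shown here.
APPROVED_COUNTRIES = {"US"}
COMPETITOR_EMPLOYERS = {"competitor a", "competitor b"}


@dataclass
class ProfileResponse:
    country: str        # assumed to be an ISO 3166-1 alpha-2 code
    employer: str
    accepted_nda: bool


def screen(response: ProfileResponse) -> tuple[bool, str]:
    """Apply the screen-in / screen-out rules; return (eligible, reason)."""
    if response.country.strip().upper() not in APPROVED_COUNTRIES:
        return False, "country not yet approved"
    if response.employer.strip().lower() in COMPETITOR_EMPLOYERS:
        return False, "employed by a competitor"
    if not response.accepted_nda:
        return False, "did not accept NDA"
    return True, "eligible"
```

Returning a reason alongside the decision makes it easier to report why respondents were screened out.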

Rule #2 — Include basic screening data ONLY.

Once we had determined our screening questions, we could focus on the data we wanted to collect from each panelist. This was more difficult, because each team member had their own wish list of profile questions, and it took some negotiation to settle on a shared set. We found that if we focused on the data most essential for recruiting for future studies, we could separate the questions to keep from the questions to toss. Any follow-up study might still require additional screening questions, but the initial profile survey would let us make a first pass at narrowing our audience. (And any additional data we collect in a follow-up screener is appended to the user’s profile for use the next time around.)

Rule #3 — Assume the ability to grow the user’s profile over time.

It was much easier to decide which profile questions to keep and which to defer when we kept in mind that we didn’t have to capture everything about the user in the profile survey. The whole point of setting up the panel was to be able to build an ongoing relationship with our panelists and continue to grow our profile data over time. Knowing that this wasn’t our one-and-only opportunity to collect information about our users enabled the team to distinguish between “must have” and “nice to have” data.
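Growing a profile over time can be modeled as merging each new screener’s answers into the stored profile. This is a hypothetical sketch, not how Qualtrics stores contact data: it assumes a profile is a dictionary of question to answer, and it records the date each answer was collected so that an older answer never overwrites a newer one.

```python
from datetime import date


def amend_profile(profile: dict, screener_answers: dict, collected_on: date) -> dict:
    """Return a copy of the profile with new screener answers merged in.

    Each answer is stored with its collection date; for a question that
    already has an answer, the more recently collected one is kept.
    """
    updated = dict(profile)  # leave the caller's profile unchanged
    for question, answer in screener_answers.items():
        current = updated.get(question)
        if current is None or current["collected_on"] <= collected_on:
            updated[question] = {"value": answer, "collected_on": collected_on}
    return updated
```

Keeping the collection date also supports re-profiling later, since stale answers are easy to find.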

Choose a tool

Of course, all of this user data needed to reside somewhere… and we knew that the “somewhere” needed to be an enterprise solution that was GDPR compliant. It needed to collect profile data via a survey, write that data to users’ profiles, and then include that profile information in the responses to any future research activities. It needed to track and limit how frequently we contacted any individual user, and it needed basic e-newsletter functionality (e.g., emailing distribution lists without triggering spam filters, easy opt-out, etc.).
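A contact-frequency limit is essentially a rolling-window count. The sketch below assumes a hypothetical policy of at most two invitations per 90 days; the actual limits are set by the team’s governance rules, and a tool like Qualtrics enforces them through its own settings rather than custom code.

```python
from datetime import datetime, timedelta

# Hypothetical policy values, not the team's actual limits.
MAX_CONTACTS = 2
WINDOW = timedelta(days=90)


def can_contact(contact_history: list[datetime], now: datetime,
                max_contacts: int = MAX_CONTACTS,
                window: timedelta = WINDOW) -> bool:
    """True if the panelist had fewer than max_contacts contacts in the trailing window."""
    recent = [t for t in contact_history if now - t < window]
    return len(recent) < max_contacts
```

Filtering a candidate list through `can_contact` before sending invitations keeps any single panelist from being over-recruited.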

There are many tools that meet all of these needs, some of which were built precisely to provide a user research panel framework. Because we are part of a large enterprise organization and needed to act quickly, our choices were largely limited to tools with which we already had a master service agreement. After reviewing the tools that met these additional criteria, we decided to use Qualtrics to host our panel.

(In an upcoming post I’ll discuss how we’ve leveraged Qualtrics’ functionality to support a variety of research methods, including discussion guides for user interviews.)

Establish panel governance

A primary purpose in setting up the panel was to provide more control around our recruitment processes, including contact rates, data storage and sharing, and incentive distributions. Our team established the initial governance model, but as the panel has grown — and as our team has grown — we’ve had to make small adjustments. The key to success has been to work collectively as a team to define a set of rules that allow us each to achieve our goals, while always prioritizing the user.

Recruit users

Once all of our tooling, processes, and governance were in place, it was time to start recruiting. Since we already had lists of previous research participants, we began by inviting a small group of “friendlies” into the panel. We described what we were doing, told them they were early invitees, and asked them to send us any feedback about the process and the survey. Over the next month we continued to send invitations to small groups of users, pausing after each batch to evaluate whether we needed to make any changes. Once we felt confident that everything was ready for prime time, we sent invitations to the remaining users.

Keepin’ it fresh

Of course, building and populating the initial panel was only the beginning of our new ResearchOps adventure… as with any user group, there’s bound to be attrition and gaps. After taking a few weeks to celebrate our success, we buckled down and identified our longer-term needs and priorities, including:

- the need for ongoing recruiting efforts

- ongoing improvements to the profile survey

- expansion to other countries and audiences

- identifying processes for removing low-quality panelists

- identifying processes for re-profiling existing panelists

The case to hire a dedicated ResearchOps role

Pretty quickly it became apparent that we’d built an incredible tool… but one that would require more care and feeding than we researchers could provide while also conducting our research. We leveraged best practices from the ResearchOps community to define a job role and skill set that would provide the operations backbone for our research team, enabling each UX researcher to focus even more energy on their craft.

Having a user research panel has been truly revolutionary for our team. The panel has not only made us more efficient, but it has also lent legitimacy to our team and our work. We’ve been able to form new collaborations within the company and to weather the arrival of GDPR and CCPA without disruption.

When we launched the panel, we were a team of five. We began small, with a panel of about 200 legal professionals in the United States. Our vision was to ultimately create a user panel that would support our UX research activities around the globe and across all of our products. A year later we are well on our way, having expanded the panel to three countries and several different audiences.